The Randall Museum in San Francisco hosts a large HO-scale model railroad. Created by the Golden Gate Model Railroad Club starting in 1961, the layout was donated to the Museum in 2015. Since then I have started automating the trains running on the layout. I am also the model railroad maintainer. This blog describes various updates on the Randall project. I maintain a separate blog for all my electronics projects not directly related to Randall.

2020-06-14 - “Train Motion” Video Display Project at Randall

Here’s what’s been keeping me busy for the past two weeks at home:

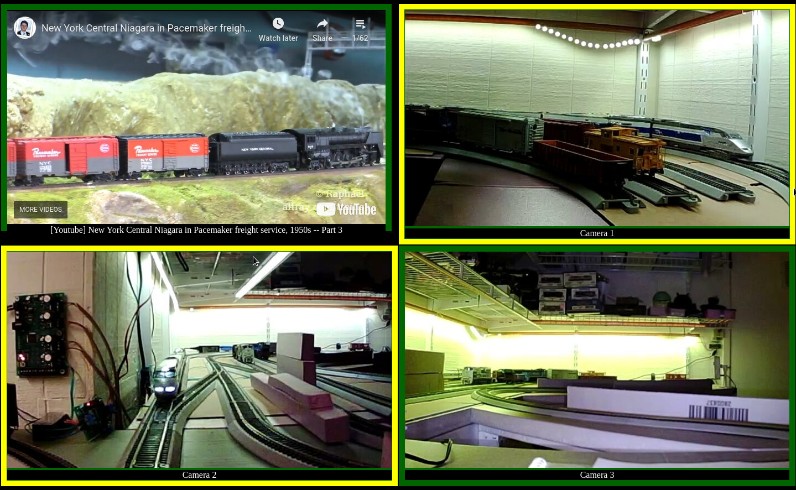

“Vision” a.k.a. Train Motion (source, MIT license) runs full screen on a laptop and plays a series of YouTube videos of the model railroad in a loop. Three cameras point at the layout and, when activity is detected on these cameras, the view changes to a matrix displaying both the YouTube video and the three layout cameras, showing whatever train is going by. Once the train is gone, the YouTube video plays full screen again.

Visitors get to enjoy an interactive display -- either a curated series of pre-recorded videos, or live action of the trains running on the model railroad.

Here is a video demonstration of “Train Motion” in action at Randall:

Presentation video (Subtitles are available, use the [CC] button on the video to see them).

The video monitor is located behind one of the public windows so that the young public can watch the trains -- recorded or live, as they happen.

Here is a screenshot of it on my makeshift test track at home, before I was able to test it at the museum:

The sections “Cameras 1, 2, 3” are the live cameras. They are shown with a yellow frame when motion is detected.

So how does that work?

I use three IP cameras connected over wifi.

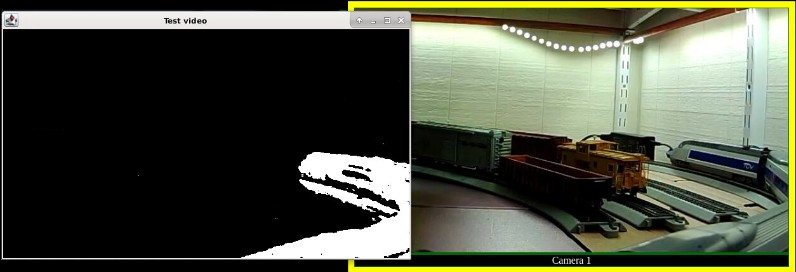

I wrote a Java-based program to handle this. Train Motion uses the FFmpeg API to fetch the cameras’ h264 feeds. OpenCV is used to do basic background subtraction using a MOG2 algorithm followed by a MedianBlur filter to remove noise. This very effectively separates the motion from the static background, as can be seen in the example output below:
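The principle can also be illustrated in a few lines of plain Java. This is a minimal sketch and not the Train Motion code: to stay self-contained it does not use OpenCV and it does not implement MOG2. It swaps in a much simpler running-average background model, then applies a 3x3 median filter to the resulting mask to drop isolated noisy pixels. The class name, the learning rate, and the difference threshold are all assumptions made up for the example.

```java
import java.util.Arrays;

/** Hypothetical sketch: running-average background subtraction + 3x3 median filter. */
final class SimpleBackgroundSubtractor {
    private final int width;
    private final int height;
    private final float[] background;  // per-pixel background estimate
    private final float learningRate;  // assumed tuning value, e.g. 0.05
    private final int diffThreshold;   // assumed tuning value, e.g. 25 gray levels
    private boolean initialized = false;

    SimpleBackgroundSubtractor(int width, int height, float learningRate, int diffThreshold) {
        this.width = width;
        this.height = height;
        this.background = new float[width * height];
        this.learningRate = learningRate;
        this.diffThreshold = diffThreshold;
    }

    /** Takes a grayscale frame (0..255 per pixel); returns a mask where 255 = changed, 0 = static. */
    int[] apply(int[] gray) {
        int[] mask = new int[gray.length];
        if (!initialized) {
            // The first frame only seeds the background model.
            for (int i = 0; i < gray.length; i++) {
                background[i] = gray[i];
            }
            initialized = true;
            return mask;
        }
        for (int i = 0; i < gray.length; i++) {
            float diff = Math.abs(gray[i] - background[i]);
            mask[i] = diff > diffThreshold ? 255 : 0;
            // Slowly blend the new frame into the background estimate.
            background[i] += learningRate * (gray[i] - background[i]);
        }
        return medianFilter3x3(mask);
    }

    /** 3x3 median filter; border pixels are copied unchanged. */
    int[] medianFilter3x3(int[] src) {
        int[] dst = src.clone();
        int[] window = new int[9];
        for (int y = 1; y < height - 1; y++) {
            for (int x = 1; x < width - 1; x++) {
                int n = 0;
                for (int dy = -1; dy <= 1; dy++) {
                    for (int dx = -1; dx <= 1; dx++) {
                        window[n++] = src[(y + dy) * width + (x + dx)];
                    }
                }
                Arrays.sort(window);
                dst[y * width + x] = window[4]; // middle of 9 sorted values
            }
        }
        return dst;
    }
}
```

With this sketch, a single flickering pixel is erased by the median filter, while a larger blob (a train going by) keeps its core in the mask.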

Train Motion also incorporates a Jetty web server and provides the main JavaScript display.

The Train Motion source is available at bitbucket.org/model-railroad/vision under an MIT License.

The laptop runs Linux with Chromium in kiosk mode to display the output full screen with both a YouTube viewer and three MJPEG viewers.

Train Motion decodes the input streams from the cameras, runs the motion analysis, serves the results via a JSON REST API, and then re-encodes the video into local streams to display them in Chromium. Videos served locally use MJPEG streams, as that turned out to be less CPU intensive.

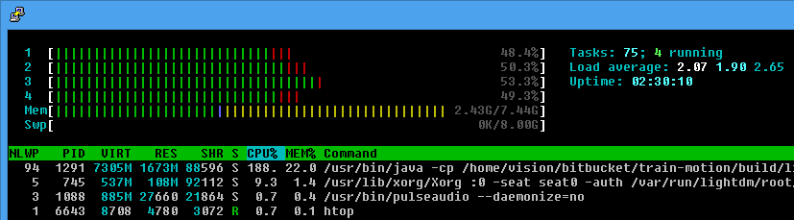

I currently run all of this on my old personal Lenovo T410 laptop, which has a first-generation i5 processor, and the 4 cores manage to process three 640x480 @ 15 fps feeds, all at around 70% CPU usage.

I originally targeted 1280x720 @ 24 fps, but the laptop was not able to process that properly. More importantly, I quickly realized the laptop has a 1440 px wide screen, which is ideal for displaying two 640 px wide videos side by side (1280 px out of 1440). When running at 1280x720, not only does the CPU have three times as many pixels to process per frame (and close to five times as many per second at 24 fps instead of 15), but in the end it would still need to shrink the video, negating any benefit of the higher resolution and the extra CPU work.
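The numbers behind that trade-off are easy to check. The small sketch below (class and method names are made up for the example) computes the pixel load for both resolutions and checks whether two videos fit side by side on a 1440 px wide screen. Per frame, 1280x720 is 921,600 pixels against 307,200 for 640x480, a factor of 3. Per second, 24 fps against 15 fps brings the factor to 4.8.

```java
import java.util.Locale;

/** Hypothetical helper to compare the pixel load of two video formats. */
final class PixelBudget {
    static long pixelsPerFrame(int width, int height) {
        return (long) width * height;
    }

    static long pixelsPerSecond(int width, int height, int fps) {
        return pixelsPerFrame(width, height) * fps;
    }

    /** True if `count` videos of the given width fit next to each other on the screen. */
    static boolean fitsSideBySide(int videoWidth, int count, int screenWidth) {
        return videoWidth * count <= screenWidth;
    }

    public static void main(String[] args) {
        long small = pixelsPerFrame(640, 480);   // 307,200
        long large = pixelsPerFrame(1280, 720);  // 921,600
        double perFrame = (double) large / small;
        double perSecond = (double) pixelsPerSecond(1280, 720, 24)
                / pixelsPerSecond(640, 480, 15);
        System.out.println(String.format(Locale.ROOT,
                "per frame: %.1fx, per second: %.1fx", perFrame, perSecond));
        System.out.println("two 640 px videos fit on 1440 px: "
                + fitsSideBySide(640, 2, 1440));
        System.out.println("two 1280 px videos fit on 1440 px: "
                + fitsSideBySide(1280, 2, 1440));
    }
}
```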

Some of the technical choices here were deliberate, and others are just shortcuts “because it’s version 0.1”. The whole project is an early prototype, and I’m listening to feedback. I’ve already received many suggestions when demonstrating this, which is good.

On a more technical level, one may logically question the decoding/re-encoding part. My original intent was to pipe the H.264 feed directly through to Chromium via the web server. However, wifi IP cams can be unreliable and tend to disconnect/reconnect. To simplify, the MJPEG streamer provides a constant feed to the Chromium views -- if a camera disconnects, it just keeps sending the last frame while trying to reconnect to the camera. It’s also useful at startup, as the Chromium page starts pulling the stream before the cameras have had time to boot -- the goal is that all of this will eventually be installed on the layout at the museum, to turn on automatically when the room’s power is turned on in the morning. Everything needs to start up automatically.
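The “keep sending the last frame” behavior can be sketched in a few lines. This is not the actual Train Motion code, only an illustration of the idea: a camera reader thread publishes each new frame, and the streamer always has something to send, even before the camera has booted or while it is reconnecting. The class and method names are hypothetical, and the placeholder frame used before the first real frame arrives is an assumption.

```java
import java.util.concurrent.atomic.AtomicReference;

/** Hypothetical sketch: always hands the streamer the most recent frame available. */
final class LastFrameHolder {
    private final AtomicReference<byte[]> lastJpeg;

    /** The placeholder is what viewers see until the camera delivers its first frame. */
    LastFrameHolder(byte[] placeholderJpeg) {
        this.lastJpeg = new AtomicReference<>(placeholderJpeg);
    }

    /** Called by the camera reader thread; a null frame (camera dropped) is ignored. */
    void onCameraFrame(byte[] jpeg) {
        if (jpeg != null) {
            lastJpeg.set(jpeg);
        }
    }

    /** Called by the MJPEG streamer at its own fixed rate; never returns null. */
    byte[] frameToSend() {
        return lastJpeg.get();
    }
}
```

The design choice here is that the streamer never blocks on the camera: the two sides run at their own pace and only share a single reference.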

One part that could use more work is the motion detection. I tried a couple of the algorithms available in OpenCV. There are others; some are fancier but require more frames or more CPU. I’m also deliberately avoiding the AI/ML hype here. Background subtraction algorithms are not exactly a new thing, although they keep improving all the time. No fancy machine learning here. We’re just comparing pixels the traditional way. It’s important to realize that the detection does not know what is changing -- this isn’t about detecting “there’s an engine moving on track #3”. It’s just about detecting that something has changed in the picture, and here’s a blob of pixels representing that change. And the core “is there motion” test is just a basic threshold comparator on the overall number of those pixels: not their location, just their count.
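That last step is simple enough to sketch. The code below assumes the background subtraction produces a mask with one integer per pixel, where any non-zero value means “changed”. Whether the real program compares an absolute count or a fraction of the image is not stated here, so the fraction-based threshold and all the names are assumptions.

```java
/** Hypothetical sketch of a count-based "is there motion" test on a foreground mask. */
final class MotionThreshold {
    /** Counts the pixels marked as changed (non-zero) in the mask. */
    static int countForeground(int[] mask) {
        int count = 0;
        for (int value : mask) {
            if (value != 0) {
                count++;
            }
        }
        return count;
    }

    /**
     * Reports motion when at least minFraction of all pixels changed.
     * Only the count matters; pixel positions are never looked at.
     */
    static boolean hasMotion(int[] mask, double minFraction) {
        return countForeground(mask) >= minFraction * mask.length;
    }
}
```

For example, with a 640x480 mask and a threshold of 1%, motion would be reported once at least 3,072 pixels differ from the background, wherever they are in the picture.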

Coming back to the video stream quality, sometimes less is really more, and an “HD” feed is really not necessary here with this equipment. One option at the museum would be to display on a larger monitor or a larger LCD TV panel, in which case running at 1280x720 would have some value. I’d need a beefier machine, or a lot more optimization. I am fairly sure we could squeeze a bit more performance out of that program, since it is essentially a quick throw-away experiment after all.

Overall I’m very satisfied with what I got out of what some people would consider to be obsolete equipment.